What is robots.txt? Have you ever heard of it? If not, here is some good news: today I am going to tell you about robots.txt.

If you have a blog or a website, you may have noticed that information you did not want made public sometimes ends up on the Internet. Do you know why this happens? And why does some of your good content never get indexed, even after a long time? If you want to know the secret behind these things, read this article on robots.txt all the way to the end.

To tell search engines which files and folders of a website should be shown publicly and which should not, you can use the robots meta tag. However, not every search engine reads meta tags, so many robots meta tags go unnoticed. A better way is to use a robots.txt file, which lets you easily tell search engines about the files and folders of your website or blog.

So today I thought I would give you complete information about robots.txt, so that you have no trouble understanding it from here on. So, without further delay, let's learn what robots.txt is and what its benefits are.

What is robots.txt

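Robots.txt is a plain text file, placed in the root directory of a website, that tells search engine robots (crawlers) which pages and folders they may crawl and which they should not.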

Search engines are not obliged to follow robots.txt, but the major ones do pay attention to it and do not crawl the pages and folders listed in it. That is why robots.txt is important, and why it must be kept in the main (root) directory, where search engines can easily find it.

Note that if you do not put this file in the right place, search engines will assume your site has no robots.txt file at all, and the rules you wrote in it will simply be ignored.

So this small file matters a lot: if it is used incorrectly (for example, by accidentally blocking important pages), it can also hurt your website's ranking. That is why it is worth understanding it well.

How does it work?

When a search engine's web spider visits your website or blog for the first time, it first reads your robots.txt file, because that file tells it what it may crawl and what it should skip. It then crawls the allowed pages, and those pages can be indexed and displayed in search engine results.
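The check a well-behaved crawler performs can be sketched with Python's standard `urllib.robotparser` module. This is a minimal illustration, not how any particular search engine is implemented: the robots.txt content, the bot name `ExampleBot`, and the `example.com` URLs are all hypothetical, and the file is parsed from a string instead of being downloaded.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for www.example.com (an assumption for illustration).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def crawlable(urls, user_agent="ExampleBot"):
    """Return only the URLs this (hypothetical) crawler may fetch according to ROBOTS_TXT."""
    parser = RobotFileParser()
    # A real crawler would call parser.set_url(...) and parser.read() to download
    # https://www.example.com/robots.txt; here we parse a local string instead.
    parser.parse(ROBOTS_TXT.splitlines())
    return [u for u in urls if parser.can_fetch(user_agent, u)]


pages = [
    "https://www.example.com/",
    "https://www.example.com/blog/hello.html",
    "https://www.example.com/private/notes.html",
]
print(crawlable(pages))
```

Only the first two URLs are returned; the page under `/private/` is skipped before it is ever requested.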

A robots.txt file can prove very beneficial for you.

How to make robots.txt file

If you have not yet made a robots.txt file for your website or blog, you should make one soon, because it will prove very useful later. To make one, follow these steps:

- First, create a text file and save it with the name robots.txt. On Windows you can use Notepad; on a Mac, use a text editor and save the file as plain text.

- Now upload it to the root directory of your website. This is the top-level folder (sometimes called "htdocs"), and the file should appear directly after your domain name, for example https://www.example.com/robots.txt.

- If you use subdomains, you need a separate robots.txt file for each subdomain.
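Because crawlers always look for robots.txt at the root of the host they are visiting, the file's location can be derived from any page URL. The small Python sketch below shows this; the function name `robots_url` and the `example.com` addresses are hypothetical. It also shows why each subdomain needs its own file: a different host gives a different robots.txt URL.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the URL where crawlers look for robots.txt for the host of page_url."""
    parts = urlsplit(page_url)
    # Keep only the scheme and host (netloc also keeps any port);
    # replace the path with /robots.txt and drop the query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.example.com/blog/post-1?ref=home"))
print(robots_url("https://shop.example.com/cart"))
```

The first call gives `https://www.example.com/robots.txt` and the second gives `https://shop.example.com/robots.txt`, so rules written for `www` do not cover the `shop` subdomain.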

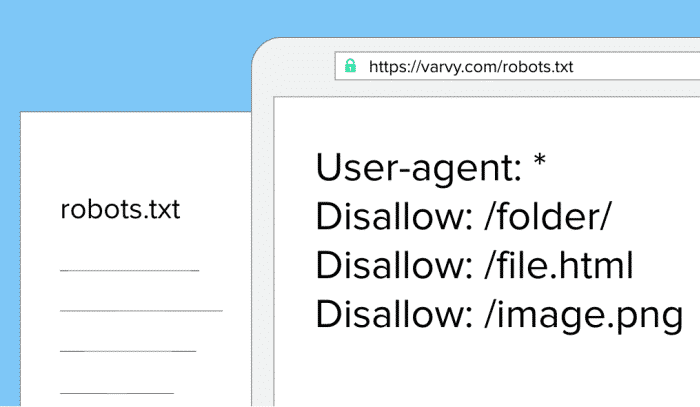

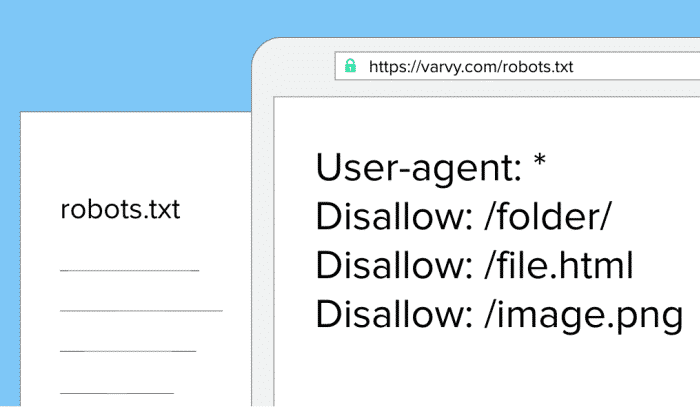

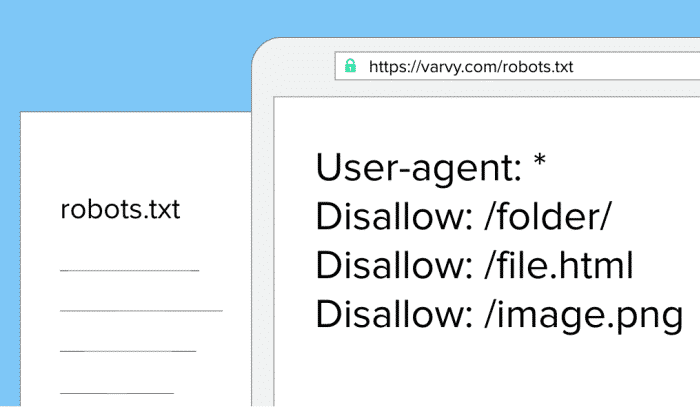

What is the syntax of robots.txt

In robots.txt we use a few directives and rules that are important to know:

- User-agent: the robot that the rules which follow apply to (e.g. "Googlebot"). Use * to mean all robots.

- Disallow: the pages or folders you do not want bots to access. Write a separate Disallow line for each file or folder.

- Noindex: intended to stop search engines from indexing the pages you list. Note, however, that Google stopped supporting noindex in robots.txt in 2019, so for Google use the robots meta tag instead.

- Use a blank line to separate each User-agent/Disallow group, but do not put any blank line inside a group (between the User-agent line and that group's last Disallow line).

- The hash symbol (#) adds comments to a robots.txt file: everything after a # is ignored. A comment can take up a whole line or go at the end of a line.

- Directory and file names are case-sensitive: "private", "Private", and "PRIVATE" are all different to search engines.
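To make the grouping, comment, and case-sensitivity rules above concrete, here is a deliberately simplified robots.txt reader in Python. It is a hypothetical sketch that follows only the rules listed here (the names `parse_groups` and `is_blocked` are made up for illustration); real search engine parsers handle more directives, such as Allow and wildcards.

```python
def parse_groups(text):
    """Split robots.txt text into groups of user-agents and their Disallow paths."""
    groups = []
    current = None      # group currently being filled
    in_rules = False    # True once the current group has a Disallow line
    for raw in text.splitlines():
        if not raw.strip():
            # A blank line ends the current group.
            current, in_rules = None, False
            continue
        line = raw.split("#", 1)[0].strip()  # everything after '#' is a comment
        if not line:
            continue  # the whole line was a comment
        field, _, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if field == "user-agent":
            # Start a new group after a blank line or after the previous group's rules.
            if current is None or in_rules:
                current = {"user_agents": [], "disallow": []}
                groups.append(current)
                in_rules = False
            current["user_agents"].append(value)
        elif field == "disallow" and current is not None:
            in_rules = True
            if value:  # an empty Disallow blocks nothing
                current["disallow"].append(value)
    return groups


def is_blocked(group, path):
    """Paths are compared case-sensitively, as plain prefixes."""
    return any(path.startswith(rule) for rule in group["disallow"])


sample = """\
# Rules for every robot
User-agent: *
Disallow: /Private/   # only this exact capitalization is blocked
Disallow: /logs
"""
group = parse_groups(sample)[0]
print(group["user_agents"], group["disallow"])
print(is_blocked(group, "/Private/a.html"), is_blocked(group, "/private/a.html"))
```

The comments are dropped, both Disallow lines land in the single `*` group, and `/Private/a.html` is blocked while `/private/a.html` is not, because the comparison is case-sensitive.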

Let's understand this with the help of an example. In the robots.txt file below:

- The robot "Googlebot" has nothing listed in its Disallow statement, so it is free to go anywhere.

- "msnbot" is blocked from the whole site.

- All other robots are not allowed to access the /tmp/ directory, or any directory or file whose name starts with /logs (for example /logs or /logs.php).

```
User-agent: Googlebot
Disallow:

User-agent: msnbot
Disallow: /

# Block all robots from tmp and logs directories
User-agent: *
Disallow: /tmp/
Disallow: /logs # for directories and files called logs
```
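One way to check that an example file really does what the explanation says is to feed it to Python's standard `urllib.robotparser` and ask it about a few URLs. This is a sketch: `OtherBot` and the `example.com` paths are hypothetical, and individual search engines may interpret edge cases slightly differently from this parser.

```python
from urllib.robotparser import RobotFileParser

EXAMPLE = """\
User-agent: Googlebot
Disallow:

User-agent: msnbot
Disallow: /

# Block all robots from tmp and logs directories
User-agent: *
Disallow: /tmp/
Disallow: /logs # for directories and files called logs
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

checks = [
    ("Googlebot", "/tmp/cache.html"),   # Googlebot: empty Disallow, allowed everywhere
    ("msnbot", "/index.html"),          # msnbot: blocked from the whole site
    ("OtherBot", "/tmp/cache.html"),    # everyone else: /tmp/ is blocked
    ("OtherBot", "/logs.php"),          # ...and so is anything starting with /logs
    ("OtherBot", "/index.html"),        # ...but other pages are allowed
]
for agent, path in checks:
    allowed = parser.can_fetch(agent, "https://www.example.com" + path)
    print(f"{agent:10} {path:16} {'allowed' if allowed else 'blocked'}")
```

The results match the three points above: Googlebot is allowed into /tmp/, msnbot is blocked everywhere, and other robots are kept out of /tmp/ and /logs.php but may still fetch /index.html.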

Advantages of using robots.txt

There are many benefits to using robots.txt, but here I have listed a few very important ones that everyone should know about.

- You can keep search engines from crawling pages with sensitive information. (Keep in mind that robots.txt itself is publicly readable, so it is not a security measure.)

- robots.txt can help keep "canonicalization" problems away, where the same content is reachable at multiple URLs. This problem is also called the "duplicate content" problem.

- It can also help Google's bots index your pages.

What if we do not use a robots.txt file?

If we do not use a robots.txt file, search engines have no restrictions on where to crawl, and they can index everything they can find on your website.
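This "no restrictions" behaviour is easy to see with Python's standard `urllib.robotparser`: an empty robots.txt contains no groups and no rules, so every URL is allowed. (When its `read()` method gets an HTTP 4xx response other than 401 or 403, for example a 404 for a missing file, this parser likewise allows everything.) The bot name `AnyBot` and the paths below are hypothetical.

```python
from urllib.robotparser import RobotFileParser

parser = RobotFileParser()
parser.parse([])  # an empty robots.txt: no groups, no rules

for path in ["/", "/admin/", "/drafts/post.html"]:
    # With no rules at all, every path is reported as fetchable.
    print(path, parser.can_fetch("AnyBot", "https://www.example.com" + path))
```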

This is fine for many websites, but as a good practice you should still use a robots.txt file, because it makes it easier for search engines to focus on the pages you want indexed instead of crawling every page again and again.

What did you learn today

I hope this article gave you complete information about what robots.txt is and that you have understood it. I request all readers to share this information with your neighbors, relatives, and friends, so that awareness spreads and everyone benefits. I need your support so that I can bring you more new information.

It has always been my endeavor to help my readers in every way. If you have any doubts, feel free to ask me, and I will definitely try to resolve them.

Please tell us in the comments how you liked this article on what robots.txt is, so that we can also learn from your thoughts and improve.